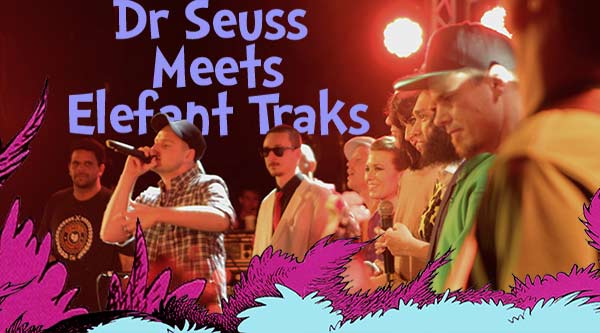

Jean Poole is a video director based in Melbourne, Australia.

– Directing / editing

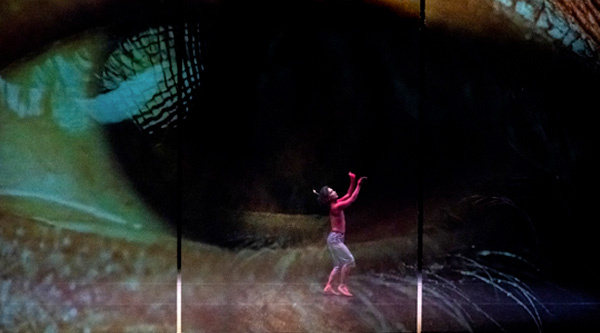

– Concert + Theatre Visuals

– Music Videos

– Cinematography

– Animation + motion design

– Post-production – colour grading + visual effects

– Events + installations / live operation + projection mapping